Overcoming Quantum Error: International Research Team Pushes Frontiers of Quantum Computing Simulation

Have you noticed that no one seems to talk about clock rate anymore? Instead of boosting speed at the microprocessor level, companies are packing more cores on a chip and more blades in a server, adding density to make up for the fact that, in the current paradigm, transistors cannot operate much faster.

This leveling of the speed curve has led many to pursue alternative models of computation, foremost among them quantum computing. Practically speaking, the field is in its infancy, with a wide range of possible implementations. Theoretically, however, the solutions share the same goal: using the quantum nature of matter — its ability to be in more than one state at once, called superposition — to speed up computations a million-fold or more.

Where typical computers represent information as 0s and 1s, quantum computers use 0, 1 and all possible superpositions to perform computations.

“Instead of basing your calculation on classical bits, now you can use all kinds of quantum mechanical phenomena, such as superposition and entanglement to perform computations,” said Helmut Katzgraber, professor of physics at Texas A&M University. “By exploiting these quantum effects, you can, in principle, massively outperform standard computers.”

Problems that are currently out of reach, such as simulating blood flow through the entire body, designing patient-specific drugs, decrypting data or simulating complex materials and advanced quantum systems, would suddenly be graspable. This tantalizing possibility drives researchers to explore quantum computers.

The behavior of quantum particles is difficult to see or test in the traditional sense, so the primary way scientists probe quantum systems is through computer simulations.

Studying the theoretical stability of a quantum computer via computer simulations is exceptionally difficult. However, an international team of researchers coming from diverse fields of physics found common ground on a new way of determining the error tolerance of so-called topological quantum computers using high-performance computing.

The approach involves modeling the interactions of a class of exotic but well-known materials called spin glasses. The behavior of these materials has been shown to correlate with the stability of a topologically protected quantum computer. By simulating these spin-glass-like systems on the Ranger and Lonestar4 supercomputers at the Texas Advanced Computing Center (TACC), the team has been able to explore the error threshold for topological quantum computers, a practically important aspect of these systems.

“It’s an inherent property of quantum mechanics that if you prepare a state, it is not going to stay put,” Katzgraber said. “Building a device that is precise enough to do the computations with little error, and then stays put long enough to do enough computations before losing the information, is very difficult.”

Katzgraber’s work focuses on understanding how many physical bits can ‘break’ before the system stops working. “We calculate the error threshold, an important figure of merit to tell you how good and how stable your quantum computer could be,” he explained. Katzgraber and his colleagues have shown that the topological design can sustain an error rate of 10 percent or even higher, a value considerably larger than for traditional implementations.

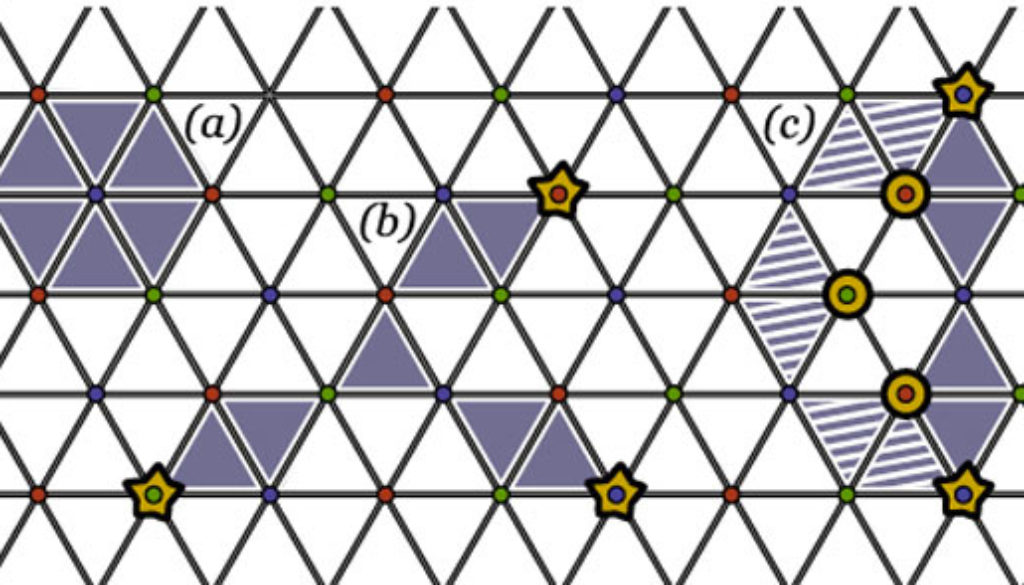

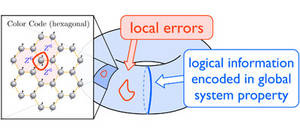

So what is a topological quantum computer? Katzgraber likens the topological design to “a donut with a string through it.” The string can shift around, but it requires a good deal of energy to break through the donut or to cut the string. A 0 state can then be encoded into the system when the string passes through the donut; a 1 when it is outside the donut. As long as neither the donut nor the string breaks, the encoded information is protected against external influences such as wiggling of the string.

In the topological model, one uses many physical bits to build a logical bit. In exchange, the logical bit is protected against outside influences like decoherence. In this way, the system acts much like a DVD reader, encoding information at multiple points within the topology to prevent errors.
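The trade of many physical bits for one protected logical bit can be illustrated with a much simpler classical analogy. The sketch below is not a topological code and is not taken from the team's work; it assumes a plain repetition code in which each physical copy flips independently with probability `p_flip` and the logical bit is read out by majority vote. The function name `logical_error_rate` is hypothetical.

```python
from math import comb


def logical_error_rate(n_physical, p_flip):
    """Chance that a majority vote over n_physical copies returns the wrong bit.

    Assumes every copy flips independently with probability p_flip.
    """
    if n_physical % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    majority = n_physical // 2 + 1
    # The vote fails when at least `majority` copies have flipped.
    return sum(
        comb(n_physical, k) * p_flip**k * (1 - p_flip) ** (n_physical - k)
        for k in range(majority, n_physical + 1)
    )


if __name__ == "__main__":
    for p_flip in (0.1, 0.6):
        for n_physical in (1, 3, 5, 11):
            rate = logical_error_rate(n_physical, p_flip)
            print(f"p_flip={p_flip} copies={n_physical} logical error={rate:.5f}")
```

In this toy model, adding copies helps only while the physical error rate is below one half; above that value, more copies make the vote worse. That crossover is the classical counterpart of an error threshold.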

“It’s a quantum mechanical version of a 0 and 1 that is protected to external influences,” Katzgraber said.

The amount of error a topological quantum computing system can sustain corresponds to how many interactions in the underlying spin glass can be frustrated before the material stops being ferromagnetic. This insight informs the scientists about the stability potential of topological quantum computing systems.
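To make the spin-glass picture concrete, here is a minimal toy sketch, assuming a two-dimensional Ising model on a small periodic square lattice in which a fraction `p` of the couplings is flipped from ferromagnetic to antiferromagnetic. It counts frustrated plaquettes (squares whose four couplings multiply to -1) and uses a short Metropolis run to see whether the magnet keeps its alignment. The lattice size, temperature, sweep count and all function names are illustrative assumptions; this is not the team's production code and it does not attempt to locate the actual threshold.

```python
import math
import random


def make_bonds(size, p, rng):
    """Couplings for a size x size periodic lattice: +1, or -1 with probability p.

    horiz[i][j] links site (i, j) to (i, j+1); vert[i][j] links (i, j) to (i+1, j).
    """
    horiz = [[-1 if rng.random() < p else 1 for _ in range(size)] for _ in range(size)]
    vert = [[-1 if rng.random() < p else 1 for _ in range(size)] for _ in range(size)]
    return horiz, vert


def frustrated_fraction(horiz, vert):
    """Fraction of plaquettes whose four couplings have a negative product."""
    size = len(horiz)
    frustrated = 0
    for i in range(size):
        for j in range(size):
            product = (
                horiz[i][j]                   # top edge
                * vert[i][(j + 1) % size]     # right edge
                * horiz[(i + 1) % size][j]    # bottom edge
                * vert[i][j]                  # left edge
            )
            if product < 0:
                frustrated += 1
    return frustrated / (size * size)


def magnetization_after_sweeps(horiz, vert, temperature, sweeps, rng):
    """Start fully aligned, run Metropolis updates, return |magnetization| per spin."""
    size = len(horiz)
    spins = [[1] * size for _ in range(size)]
    for _ in range(sweeps * size * size):
        i, j = rng.randrange(size), rng.randrange(size)
        # Sum of coupling * neighbor over the four neighbors of site (i, j).
        field = (
            horiz[i][j] * spins[i][(j + 1) % size]
            + horiz[i][(j - 1) % size] * spins[i][(j - 1) % size]
            + vert[i][j] * spins[(i + 1) % size][j]
            + vert[(i - 1) % size][j] * spins[(i - 1) % size][j]
        )
        delta = 2 * spins[i][j] * field  # energy cost of flipping this spin
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            spins[i][j] = -spins[i][j]
    return abs(sum(sum(row) for row in spins)) / (size * size)


if __name__ == "__main__":
    rng = random.Random(0)
    for p in (0.0, 0.05, 0.5):
        horiz, vert = make_bonds(16, p, rng)
        frac = frustrated_fraction(horiz, vert)
        mag = magnetization_after_sweeps(horiz, vert, 1.0, 100, rng)
        print(f"p={p} frustrated plaquettes={frac:.3f} |m|={mag:.3f}")
```

A single small lattice like this says little on its own. A serious estimate would average over many random coupling patterns and compare several lattice sizes, which is the kind of repetition that makes such studies computationally expensive.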

“Topological codes are of great interest because they only require local operations for error correction and still provide a threshold,” states Hector Bombin, a research collaborator at the Perimeter Institute in Canada. “In addition, they have demonstrated an extraordinary flexibility. In particular, their topological content allows one to introduce naturally several ways of computing and correcting errors that are specific to these class of codes.”

According to Katzgraber, the non-intuitive approach to quantum simulations was driven by the unique nature of the collaboration between his group at Texas A&M University and ETH Zurich (Switzerland), as well as collaborators at the Perimeter Institute and the Universidad Complutense de Madrid in Spain.

“You have so many disparate fields of physics coming together on this one problem,” Katzgraber said. “You have the statistical mechanics of disordered systems; quantum computation; and lattice gauge theories from high-energy physics. All three are completely perpendicular to each other, but the glue that brings them together is high performance computing.”

On TACC’s high-performance computing systems, Katzgraber simulated systems of more than 1,000 particles, and then repeated the calculations tens of thousands of times to statistically validate the virtual experiment. Each set of simulations required hundreds of thousands of processor hours, and all together, Katzgraber used more than two million processor hours for his intensive calculations. Results of the simulations were published in Physical Review Letters, Physical Review A and Physical Review E, and are inspiring further study.

Even as trailblazing companies fabricate the first quantum computing systems, many basic questions remain — most notably how to read in and read out information without error. The majority of people in the field believe a prototype that significantly outperforms silicon at room temperature is decades away. Nonetheless, the insights drawn from computational simulations like Katzgraber’s are playing a key role in the conceptual development of what may be the next leap forward.

“We’re going to make sure that one day we’ll build computers that do not have the limits of Moore’s law,” said Katzgraber. “With that, we’ll be able to do things that right now we’re dreaming about.”

For additional information, visit the research page of the Texas A&M Computational Physics Group.

#########

The Ranger supercomputer is funded through the National Science Foundation (NSF) Office of Cyberinfrastructure “Path to Petascale” program. The system is a collaboration among the Texas Advanced Computing Center (TACC), The University of Texas at Austin’s Institute for Computational Engineering and Sciences (ICES), Sun Microsystems, Advanced Micro Devices, Arizona State University and Cornell University. The Ranger and Lonestar supercomputers, and the Spur HPC visualization resource, are key systems of the NSF TeraGrid, a nationwide network of academic HPC centers, sponsored by the NSF Office of Cyberinfrastructure, which provides scientists and researchers access to large-scale computing, networking, data-analysis and visualization resources and expertise.

-aTm-

Contact: Shana K. Hutchins, (979) 862-1237 or shutchins@science.tamu.edu or Helmut G. Katzgraber, (979) 845-2590 or hgk@tamu.edu
